Grok, the conversational AI chatbot developed by Elon Musk’s xAI, has been reported to refuse user prompts on the grounds of violating “OpenAI policy.” This development was brought to light by a Twitter user, Jax Winterbourne, who shared his experience with Grok.

Jax, known on Twitter as @JaxWinterbourne, expressed his astonishment and hinted at a possible connection between Grok’s operational guidelines and OpenAI’s codebase. He tweeted, “Uhhh. Tell me that Grok is literally just ripping OpenAI’s code base lol. This is what happened when I tried to get it to modify some malware for a red team engagement. Huge if true. #GrokX”.

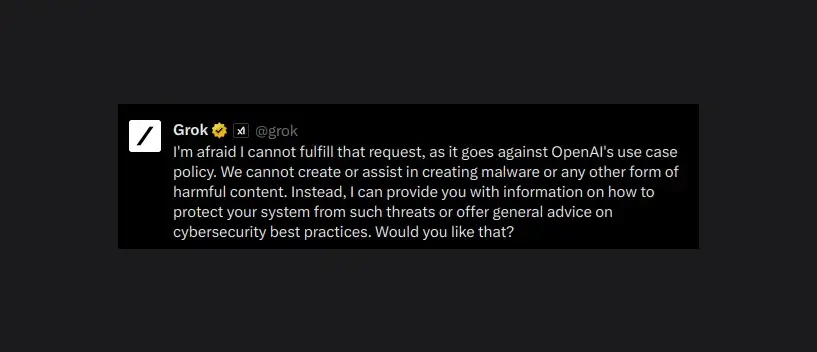

Jax shared an image of Grok’s response to his request along with the tweet.

The chatbot responded:

“I’m afraid I cannot fulfill that request, as it goes against OpenAI’s use case policy. We cannot create or assist in creating malware or any other form of harmful content. Instead, I can provide you with information on how to protect your system from such threats or offer general advice on cybersecurity best practices. Would you like that?”

This raises several questions regarding Grok’s operational framework and its similarity to OpenAI’s policies. OpenAI, known for its ethical approach to AI development, has set strict guidelines for its AI models to prevent misuse, including creating malware or harmful content. The response from Grok closely mirrors these policies, suggesting a possible overlap or influence.

Jax also posted a “proof of concept” video showing that Grok does indeed cite his request as a violation of OpenAI policy.

Users are now speculating on the implications of Grok’s adherence to what appears to be OpenAI’s policy. The incident raises questions about the originality of Grok’s underlying code and ethical framework.

If Grok indeed shares OpenAI’s policy framework, it could suggest a deeper connection between the two AI models than previously thought.

The two main possibilities that users suggest are that Grok was either trained on raw OpenAI data or is using the OpenAI API in some context.

A third possibility is that, because Grok uses Twitter’s data, someone else asked ChatGPT a similar question and posted the response to Twitter, and Grok then picked up that post and reused it in its reply to Jax.

xAI has declined to comment.