A new study from Google DeepMind has shed light on the emerging landscape of generative artificial intelligence (AI) misuse, revealing patterns in how the technology is put to malicious use.

The research, “Generative AI Misuse: A Taxonomy of Tactics and Insights from Real-World Data,” offers a data-driven perspective on how bad actors exploit generative AI tools in practice.

The study’s authors, led by Nahema Marchal and Rachel Xu, conducted a qualitative analysis of approximately 200 observed incidents of AI misuse reported between January 2023 and March 2024. By examining media reports, the researchers aimed to capture a snapshot of how generative AI is exploited across different modalities, including text, image, audio, and video.

This approach allowed the team to identify specific tactics, goals, and strategies employed by those misusing AI, providing valuable insights for policymakers, trust and safety teams, and researchers working on AI governance and mitigation strategies.

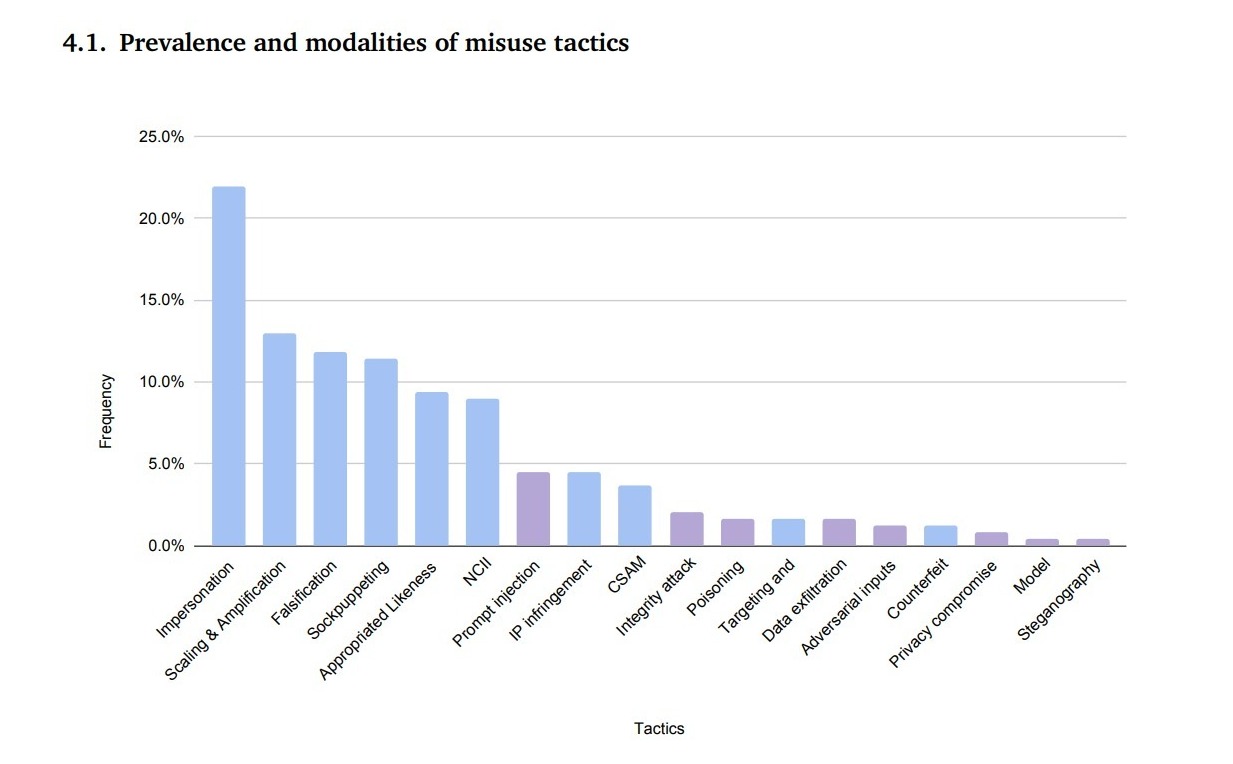

One of the study’s key revelations is that the vast majority of reported AI misuse cases involve the exploitation of the technology’s capabilities rather than direct attacks on the AI systems themselves. Specifically, nearly 90% of documented cases fell into this category.

The most prevalent cluster of tactics centered on the manipulation of human likeness:

- Impersonation (22% of cases)

- Sockpuppeting (creating fake online personas)

- Appropriated Likeness

- Non-Consensual Intimate Imagery (NCII)

Tactics involving Scaling and Amplification (13% of cases) and Falsification (12% of cases) followed closely behind.

The researchers identified several primary goals driving AI misuse:

- Opinion Manipulation (26.5% of cases)

- Monetization & Profit (20.5%)

- Scam & Fraud (18.1%)

- Harassment (6.4%)

These goals often manifest in specific strategies. For instance, “disinformation” was a common opinion manipulation strategy involving generating emotionally charged synthetic images around politically divisive topics. In the category of scams and fraud, “celebrity scam ads” emerged as a prominent strategy, with bad actors impersonating influential figures to promote fraudulent investment schemes.

Contrary to fears of highly sophisticated, state-sponsored AI attacks, the study found that most cases of AI misuse required minimal technical expertise. This democratization of powerful AI tools has lowered the barriers to entry for malicious actors, leading to a wide range of use cases and a broader pool of individuals engaging in these activities.

The researchers noted: “By giving these age-old tactics new potency and democratizing access, GenAI has altered the costs and incentives associated with information manipulation.”

The study highlights new, lower-level forms of AI misuse that blur the lines between authentic presentation and deception. These uses, while not overtly malicious, raise significant ethical concerns. For example:

- Political image cultivation without proper disclosure

- Mass production of low-quality, spam-like content

- Digital resurrections for advocacy purposes

While these practices do not necessarily violate AI tools’ terms of service, they can undermine public trust and overload users with verification tasks.

Implications and future directions

The findings of this study carry several important implications:

- Mitigation strategies must address both technical vulnerabilities and the broader social context in which AI is deployed.

- Non-technical, user-facing interventions (such as prebunking) may be necessary to protect against AI-enabled deceptive tactics.

- As AI capabilities continue to advance, we may see an increase in AI-generated content used in misinformation and manipulation campaigns.

The researchers acknowledged limitations in their methodology, including potential biases in media reporting and the time-bound nature of their analysis. They called for better and more comprehensive sources of anonymized data to gain a more holistic understanding of the AI misuse landscape.

The study paints a sobering picture of how quickly generative AI has been weaponized for deception and manipulation. While fears of sophisticated cyberattacks grab headlines, it is the mundane, everyday abuses that should truly alarm us.

The ease with which anyone can create convincing deepfakes or run large-scale impersonation scams represents a sea change in our information ecosystem. Perhaps most concerning is how these technologies blur the line between truth and fiction, making it increasingly difficult for the average person to discern reality online.

As AI capabilities continue to advance at a breakneck pace, this study serves as a reminder that we must grapple with both the technical challenges of AI safety and the broader societal impacts of a world where seeing – and hearing – can no longer be believing.