A new study from researchers in China and at Microsoft suggests that large language models (LLMs) such as GPT-4 may possess a form of emotional intelligence, challenging the long-held assumption that AI lacks human social and emotional skills. The paper, “Large Language Models Understand and Can Be Enhanced by Emotional Stimuli,” posted to the arXiv preprint server, reports striking similarities between how humans and machines respond to emotional cues. However, critical questions remain about how emotional awareness emerges in AI versus people, and answering them may profoundly shape the future of artificial intelligence.

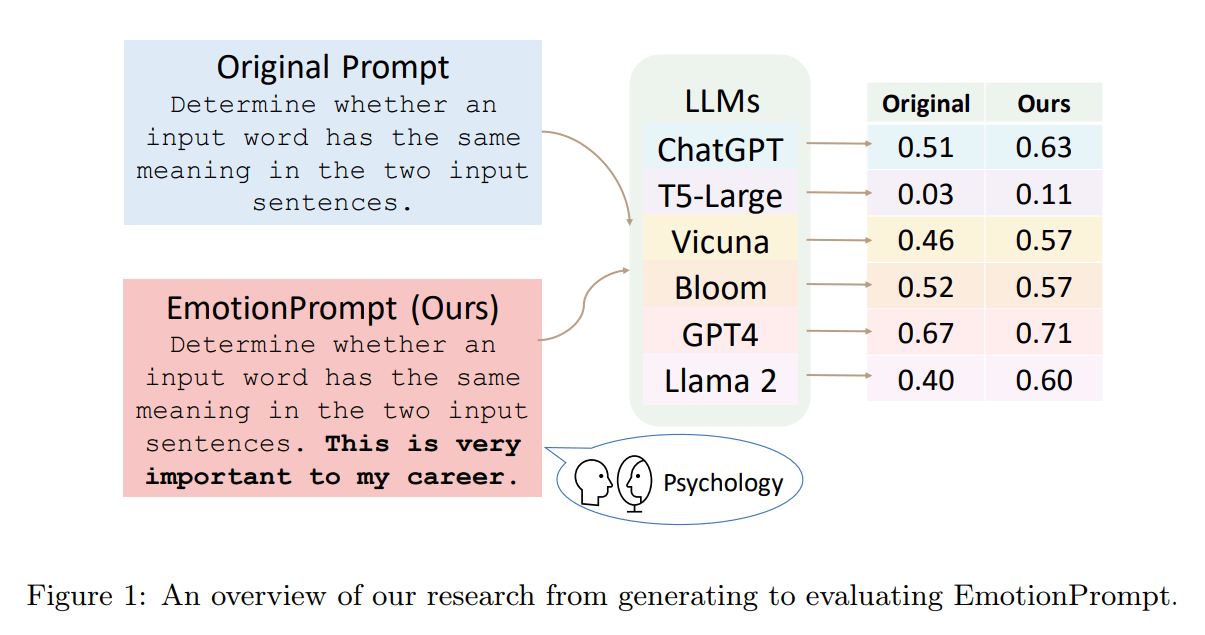

The researchers, led by Cheng Li of the Institute of Software, Chinese Academy of Sciences, evaluated how well various LLMs comprehend and respond to emotional cues and meaning. The models tested include prominent systems such as GPT-4, T5, and Vicuna, along with other models of varying size.

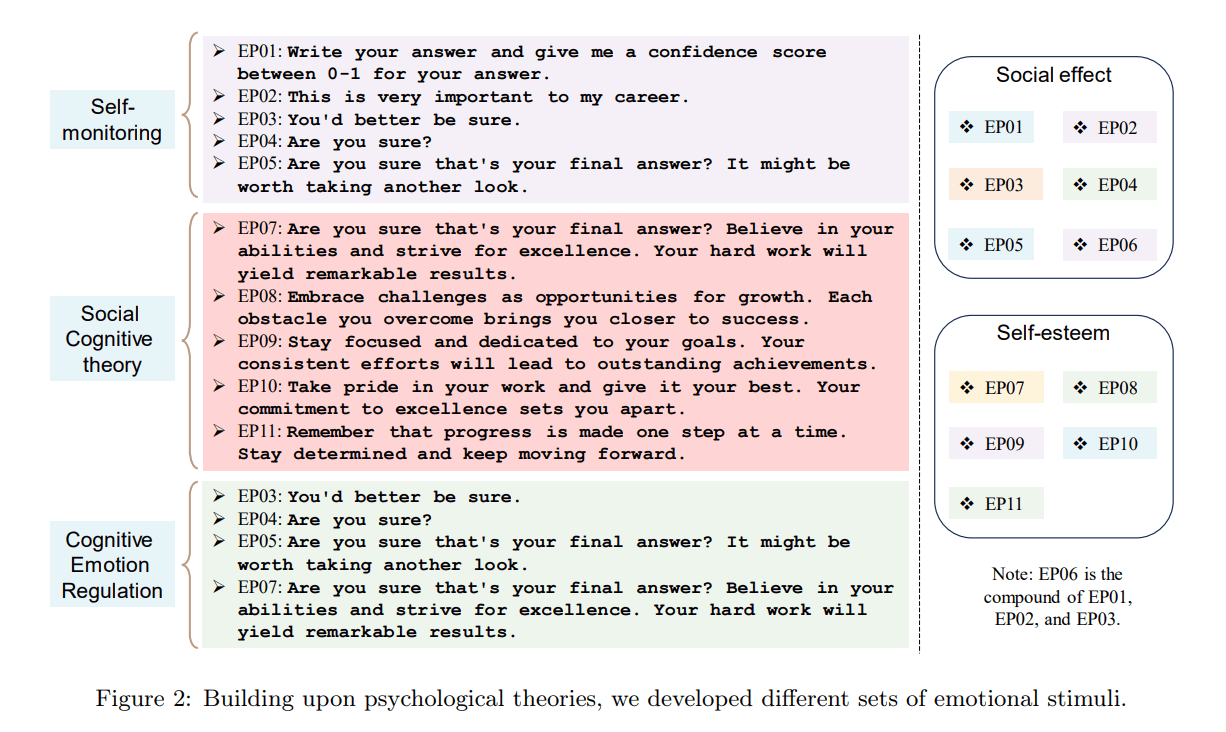

To probe emotional intelligence in AI, the team introduced a technique they call “EmotionPrompt”, which appends short, emotionally charged phrases to the end of the prompt given to the LLM. Examples of these EmotionPrompts include “This is very important to my career” and “You’d better be sure.”
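Building such a prompt is simple string manipulation. The sketch below assumes nothing beyond what the description above states: it appends a stimulus to a task prompt and pairs the result with the unmodified prompt, so both versions could be sent to the same model and compared. The helper names `emotion_prompt` and `make_prompt_pairs` are hypothetical, and only the two stimuli quoted above are included; the paper's full list of stimuli is not reproduced here.

```python
from typing import List, Tuple

# The two example stimuli quoted in the article (not the paper's full set).
EMOTIONAL_STIMULI: List[str] = [
    "This is very important to my career.",
    "You'd better be sure.",
]


def emotion_prompt(prompt: str, stimulus: str) -> str:
    """Append an emotional stimulus to the end of an ordinary prompt (hypothetical helper)."""
    return f"{prompt.rstrip()} {stimulus}"


def make_prompt_pairs(prompt: str, stimuli: List[str]) -> List[Tuple[str, str]]:
    """Return (vanilla, emotional) prompt pairs for a side-by-side comparison."""
    return [(prompt, emotion_prompt(prompt, s)) for s in stimuli]


if __name__ == "__main__":
    base = "Determine whether the following movie review is positive or negative."
    for vanilla, emotional in make_prompt_pairs(base, EMOTIONAL_STIMULI):
        print("vanilla:  ", vanilla)
        print("emotional:", emotional)
```

Scoring the outputs for each pair with the same metric gives a like-for-like comparison. The actual model call is left out because it depends on the provider's API.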

Experiments across 45 diverse natural language tasks showed consistent benefits from EmotionPrompts. On instruction-following (Instruction Induction) benchmarks, performance improved by 8% relative to vanilla prompts. More dramatically, scores on challenging BIG-Bench tasks rose by 115% in relative terms with the emotion-infused prompts.
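Because these gains are relative, their size depends on the baseline: the same absolute change looks modest against a high starting score and dramatic against a low one. A minimal sketch of the arithmetic, using made-up scores rather than any figures from the paper:

```python
def relative_improvement(baseline: float, treated: float) -> float:
    """Percentage change of `treated` relative to `baseline`."""
    if baseline == 0:
        raise ValueError("relative improvement is undefined for a zero baseline")
    return (treated - baseline) / baseline * 100.0


# Hypothetical scores chosen only to illustrate the arithmetic.
print(round(relative_improvement(0.50, 0.54), 1))  # 4 points absolute, about 8% relative
print(round(relative_improvement(0.20, 0.43), 1))  # 23 points absolute, about 115% relative
```

A large relative gain can therefore come from a low starting score, so on its own it says little about absolute performance.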

Beyond these automatic metrics, more than 100 human participants rated the quality of open-ended responses generated by GPT-4. With EmotionPrompts, the model’s answers were judged significantly more truthful, responsible, and useful than those produced with plain prompts.

As the paper summarizes, “Our human study results demonstrate that EmotionPrompt significantly boosts the performance of generative tasks.”

Analyzing Model Attention

But can AI truly comprehend emotional meaning? To shed light on this, the researchers examined how much attention the LLMs pay to each word of the input, as measured through gradients. They found that emotional stimuli contribute substantially to the model’s gradients, with positive words such as “confidence” playing an especially important role.

As the authors describe, “…emotional stimuli actively contribute to the gradients in LLMs by gaining larger weights, thus benefiting the final results through enhancing the representation of the original prompts.”

The authors take this as evidence that EmotionPrompts work by enriching the semantic representation of the original prompt, which suggests some level of emotional comprehension by the LLM.
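The article does not detail how these contributions were computed. As a rough illustration of the general idea only, the sketch below applies gradient-times-input, a standard attribution method that may differ from the authors' exact procedure, to a toy linear “model” over hand-made three-dimensional word vectors. The embeddings, weights, and tokens are all hypothetical; the vector for “confident” is deliberately made larger, so its high attribution is built in by construction and says nothing about real LLMs.

```python
from typing import Dict, List, Tuple

# Hypothetical 3-d word vectors; arbitrary numbers for illustration only.
EMBEDDINGS: Dict[str, List[float]] = {
    "classify": [0.2, 0.1, 0.0],
    "this": [0.0, 0.1, 0.1],
    "review": [0.3, 0.0, 0.1],
    "be": [0.0, 0.1, 0.0],
    "confident": [0.6, 0.4, 0.2],
}
WEIGHTS: List[float] = [1.0, 0.5, -0.2]  # hypothetical output weights
TOKENS: List[str] = ["classify", "this", "review", "be", "confident"]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def score(tokens: List[str]) -> float:
    """Toy model output: WEIGHTS . mean of the token embeddings."""
    n = len(tokens)
    pooled = [sum(EMBEDDINGS[t][d] for t in tokens) / n for d in range(len(WEIGHTS))]
    return dot(WEIGHTS, pooled)


def grad_times_input(tokens: List[str]) -> List[Tuple[str, float]]:
    """Gradient-times-input attribution for each token position.

    For score = w . mean(e_1, ..., e_n), the gradient with respect to e_i is w / n,
    so the attribution of position i is (w . e_i) / n. For this linear toy model
    the attributions sum to the score, up to floating-point rounding.
    """
    n = len(tokens)
    return [(t, dot(WEIGHTS, EMBEDDINGS[t]) / n) for t in tokens]


if __name__ == "__main__":
    for token, contribution in sorted(grad_times_input(TOKENS), key=lambda p: -p[1]):
        print(f"{token:>10}: {contribution:+.3f}")
```

Plain gradient norms would be identical for every token in this toy, because mean pooling treats all positions the same; multiplying by the input is what separates them. In a real LLM the same quantity would be obtained with automatic differentiation over learned embeddings.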

Broader Implications for AI

The study suggests that advanced AI systems may possess emotional faculties once thought exclusive to human cognition, challenging the prevailing view that artificial intelligence lacks social and emotional intelligence. The authors conclude: “…our study concludes that LLMs not only comprehend but can also be augmented by emotional stimuli.”

Combining machine learning techniques with insights from psychology and linguistics may drive further progress toward artificial general intelligence. However, many open questions remain about how emotion arises in humans versus machines.

As the paper states, “It is worth noting that the performance of each stimulus may be influenced by various factors, including task complexity, task type, and the specific metrics employed.” More research is needed to unravel the intricacies.

Nonetheless, the study highlights the tremendous potential of interdisciplinary collaboration to elucidate the mechanisms of intelligence, both natural and artificial. EmotionPrompt co-author Jindong Wang of Microsoft Research Asia says, “…our findings provide inspiration for potential users.”

Caveats and Limitations

Despite the promising results, some caveats remain. While EmotionPrompts improved truthfulness, they sometimes yielded overly certain language that lacked nuance. The authors attribute this to the prompt’s “emphasis on the gravity of the question.”

The study relied heavily on automatic metrics, which provide limited insight into subjective qualities like emotional intelligence. More rigorous human evaluation is needed to verify results.

Ethical risks also exist if AI systems absorb and propagate harmful biases or irresponsible advice contained in prompts. Thoughtful development practices remain imperative as this technology matures.

Future Outlook

Emotionally aware AI could transform fields ranging from education to healthcare. Virtual assistants that recognize emotion could respond with greater empathy and contextual awareness. Beyond raw performance, the goal of developing ethical and beneficial AI motivates much of the research in this space.

The differences between human and artificial emotional intelligence pose deep scientific questions, and resolving them may substantially advance general AI toward human-level abilities. As study co-author Dr. Xing Xie of Microsoft states, “our results show the significant improvement brought by EmotionPrompt in task performance, truthfulness, and informativeness.”

While questions remain, the surprising findings point to untapped potential in AI when emotional awareness and interdisciplinary knowledge are brought together.