Renowned tech entrepreneur Elon Musk has unveiled his latest venture: a company named xAI, with the audacious goal of understanding the “true nature of the universe.”

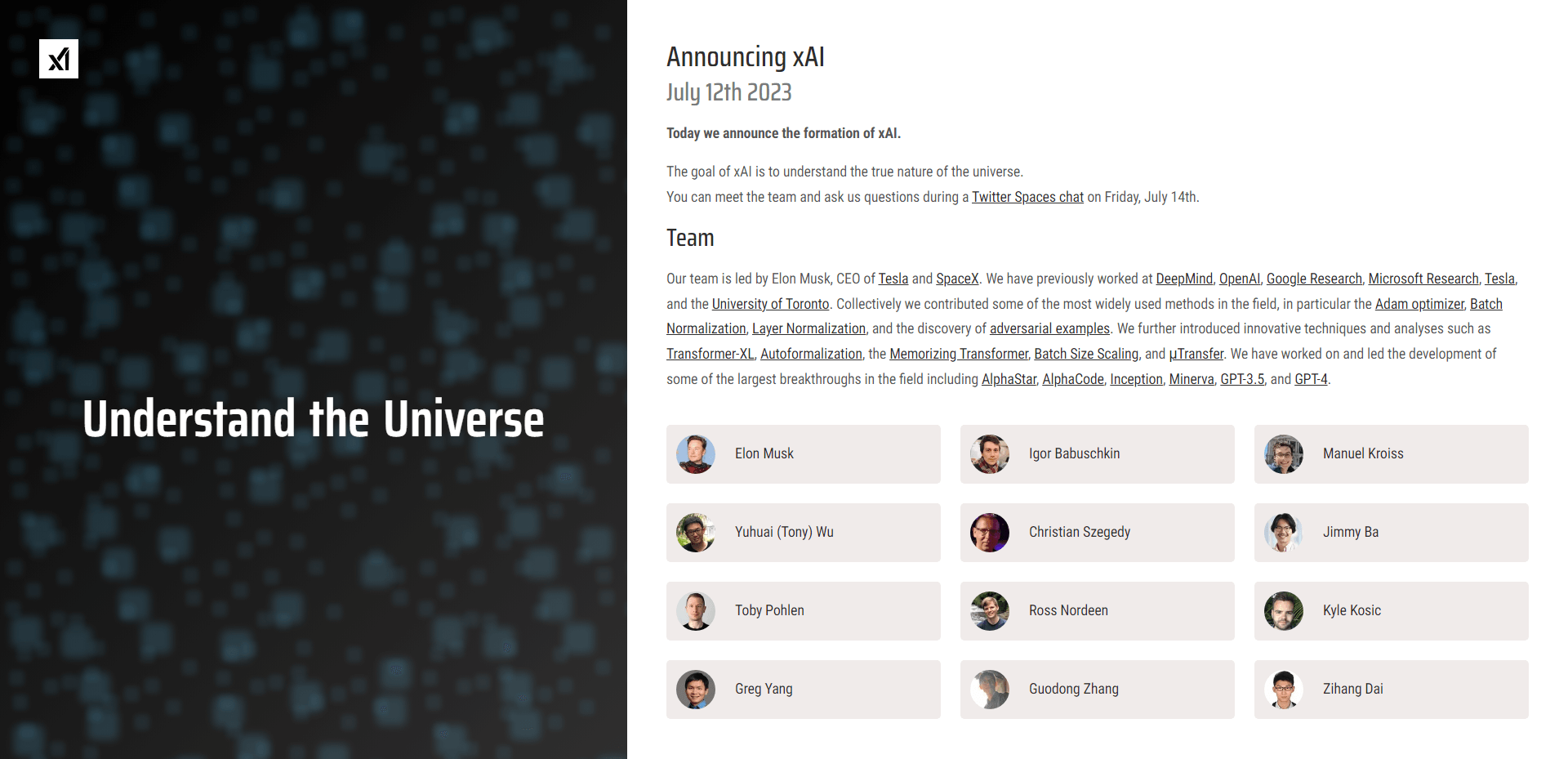

The announcement came this morning, with Musk outlining the company’s vision and its all-star team, composed of veterans of DeepMind, OpenAI, Google Research, Microsoft Research, and Tesla, along with academia, particularly the University of Toronto. Team members have contributed to widely used AI methods such as the Adam optimizer, Batch Normalization, and Layer Normalization. Their experience includes high-impact projects like AlphaStar, AlphaCode, Inception, and Minerva, as well as the language models GPT-3.5 and GPT-4.

Musk, the CEO of both Tesla and SpaceX, will lead this team, which includes Igor Babuschkin, Manuel Kroiss, Yuhuai (Tony) Wu, Christian Szegedy, Jimmy Ba, Toby Pohlen, Ross Nordeen, Kyle Kosic, Greg Yang, Guodong Zhang, and Zihang Dai. They will be advised by AI safety expert Dan Hendrycks, who currently serves as the director of the Center for AI Safety.

In a brief statement, Musk clarified xAI’s relation to other companies: “We are a separate company from X Corp, but will work closely with X (Twitter), Tesla, and other companies to make progress towards our mission.”

The exact nature of xAI’s planned operations remains undisclosed. However, given Musk’s track record and the team’s combined AI prowess, industry watchers predict the new venture could revolutionize artificial intelligence.

In addition to unveiling its mission and team, xAI announced that it is actively recruiting experienced engineers and researchers to work in the Bay Area. Interested candidates have been directed to fill out an application form on the company’s Twitter page.

The public will have an opportunity to learn more about xAI during a Twitter Spaces chat scheduled for Friday, July 14th, during which Musk and his team will field questions.

Today’s announcement has sparked immense interest in the tech and science communities, leaving many to wonder what breakthroughs might be on the horizon as xAI pursues its stated goal of understanding the “true nature of the universe.”