Google said today that an influence campaign on YouTube used AI-generated news readers in an attempt to sway viewers. The “news readers” appeared earlier this year in videos about Taiwanese President Tsai Ing-wen. Google said the campaign, which targeted Taiwanese users, was the largest it had observed so far from the group behind it.

The campaign, attributed to a group known as DRAGONBRIDGE, was part of a broader effort to spread pro-Chinese narratives and criticize Taiwan’s outgoing president. According to Google’s Threat Analysis Group (TAG), thousands of videos and comments were posted on YouTube in the days leading up to Taiwan’s general election on January 13, 2024.

DRAGONBRIDGE, also known as “Spamouflage Dragon,” is described by Google as the most prolific influence operation (IO) actor it tracks. The group, linked to the People’s Republic of China, has a presence across multiple platforms and produces a high volume of content. However, Google reports that despite the group’s efforts, its content receives very little engagement from real users.

The videos’ AI-generated news hosts varied in quality: some appeared quite realistic at first glance, while others were obviously computer-animated. The content promoted a false “secret history” document critical of President Tsai Ing-wen and included direct calls to action, such as songs with lyrics telling people not to vote for Taiwan’s Democratic Progressive Party.

Local Taiwanese news outlets have previously identified and reported on videos created by the group.

Google’s TAG observed that the campaign extended beyond YouTube, with similar content pushed on other platforms, including X (formerly Twitter), Reddit, Instagram, Facebook, and Medium. The group also attempted to spread its narrative by posting comments on videos from legitimate users, often sharing links to its own content.

Despite the sophisticated use of AI technology, the campaign struggled to gain traction. Google reported that most of DRAGONBRIDGE’s YouTube channels had zero subscribers, and most of their videos received fewer than 100 views. Any engagement the content did receive was largely inauthentic, coming from other DRAGONBRIDGE accounts rather than real users.

This campaign represents a concerning trend in using artificial intelligence for political influence operations. While DRAGONBRIDGE has been experimenting with AI-generated content for several years, this recent effort marks a significant escalation in scale and sophistication.

Google has been actively working to counter DRAGONBRIDGE’s activities. In the first quarter of 2024 alone, the company disrupted over 10,000 instances of DRAGONBRIDGE activity across YouTube and Blogger. Since the network’s inception, Google has disrupted more than 175,000 instances of its activity.

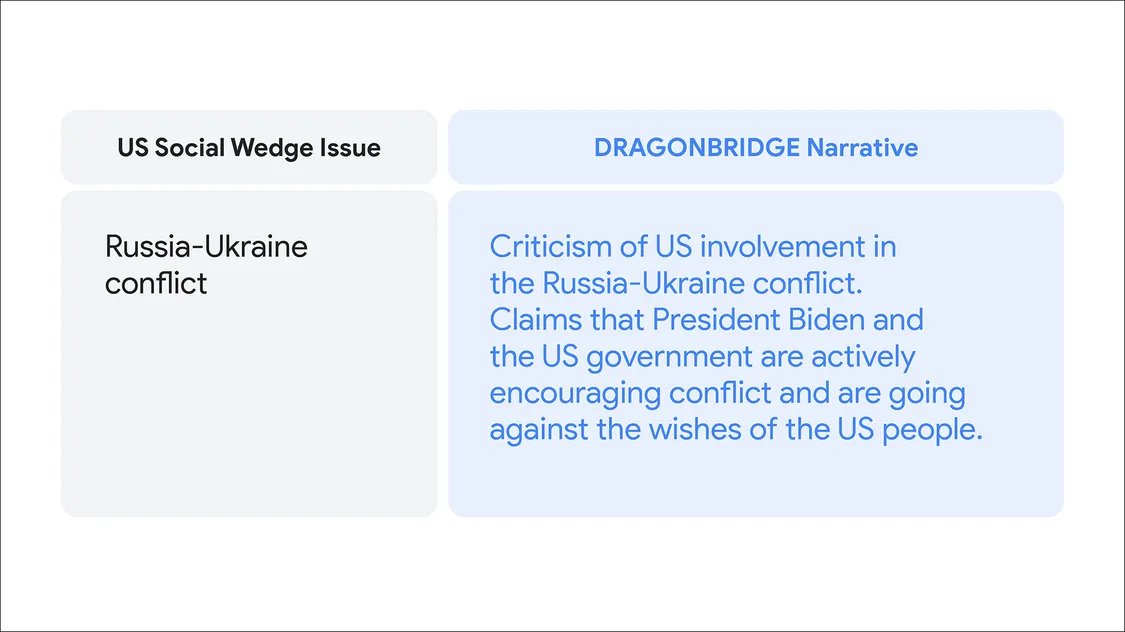

The group has previously created content around US elections and continues to produce narratives highlighting political divisions in the United States.

As the 2024 US presidential election approaches, Google’s TAG is closely monitoring DRAGONBRIDGE for any shifts in focus or tactics related to US politics.

Last year, Mandiant, a security firm owned by Google, warned that malicious actors can leverage language models to craft tailored content for specific audiences, even without understanding the target group’s language.