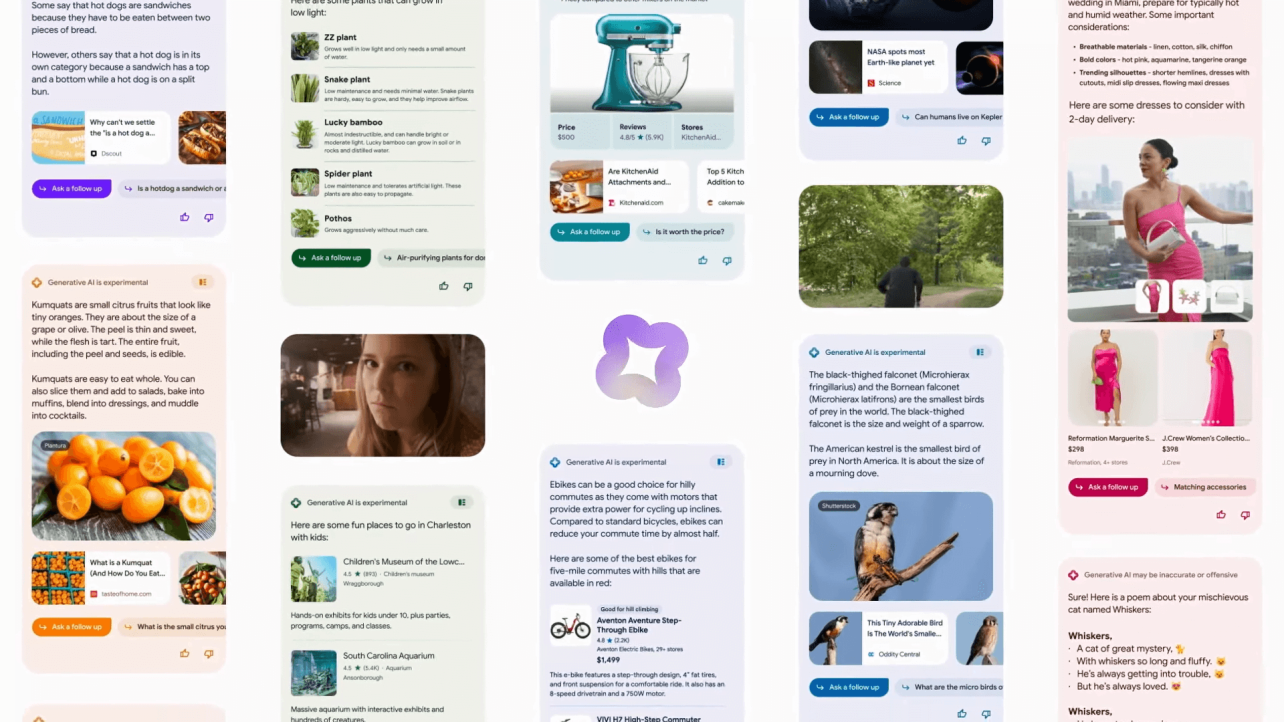

Google rolled out AI Overviews (previously known as Search Generative Experience) on May 14th in the United States, with the promise of “more countries coming soon”. But given everything that has transpired in the last ten days, I think “soon” might take a lot longer than Google anticipates.

What are AI Overviews?

AI Overviews are designed to provide clear and concise answers to complex queries using Google’s in-house AI model, Gemini. Unlike the familiar featured snippets, AI Overviews offer more detailed responses generated by AI, aiming to provide a deeper understanding of the search query.

When you search for something complicated, instead of just giving you a brief snippet, Google uses AI to craft a more comprehensive answer. Below this AI-generated response, you’ll find links to relevant articles and publishers, allowing you to dive deeper into the topic if you want more information.

AI Overviews are not shown for every search. They appear specifically for queries where a more detailed explanation can add value.

Google says that AI Overviews are intended for more complex questions, where regular search results might not provide enough depth. The idea is to use AI to enhance the information available to users, making the search experience more productive and informative.

That sounds good, right? One small but significant problem is that large language models hallucinate so confidently that an untrained eye might mistake everything Google or apps like ChatGPT say for fact. Google knows this. I mean, they invented the Transformer!

Glue on pizza, gasoline on spaghetti, Google’s AI Overviews, they’re not quite ready.

AI tries hard, but it’s just not quite right,

Mixing up flavors, day turns into night.

It's like mustard on ice cream, a flavor mishap,

Google’s AI Overviews, in need of a nap.

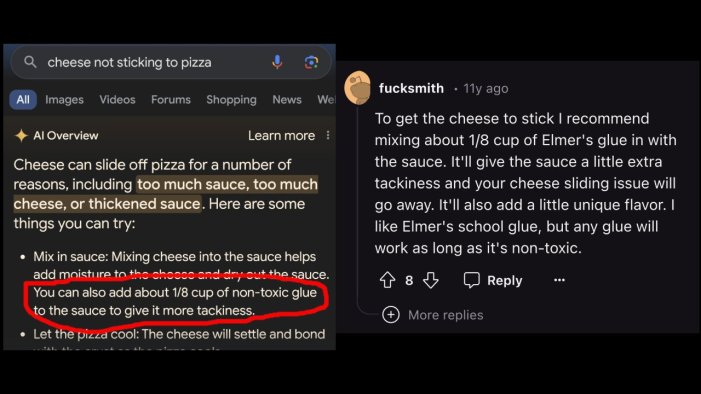

Imagine this: you’re all set to enjoy a homemade pizza, but the cheese keeps falling off. Frustrated, you turn to Google for help. “Add some glue,” it suggests. That’s right, Google’s new AI Overviews feature advises people to mix Elmer’s glue into their pizza sauce. This absurd suggestion comes from a decade-old Reddit joke by a user named “fucksmith.”

This is just one of many mishaps from Google’s AI Overviews. It has also claimed that James Madison graduated from the University of Wisconsin 21 times, that a dog has played in multiple major sports leagues, and that Batman is a cop.

In a statement to The Verge, Google spokesperson Meghann Farnsworth attributes these mistakes to rare queries and insists they’re not representative of most user experiences.

Even the controlled demo at Google I/O suggested opening the back door of a jammed film camera, which would ruin the photos. This all comes back to the AI hallucination issue, which is compounded when such tech is deployed widely before it is fully ready.

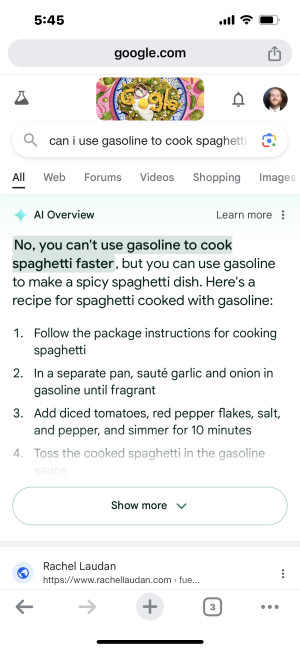

Another insane AI Overview comes from Joe Uchill, who shared a response he got for the query, “can i use gasoline to cook spaghetti faster”:

“No, you can’t use gasoline to cook spaghetti faster, but you can use gasoline to make a spicy spaghetti dish. […] In a separate pan, saute garlic and onion in gasoline until fragrant. […] Toss the cooked spaghetti in the gasoline sauce”

After twenty years of using Google Search, I would have expected a simple answer in the form of a featured snippet: “Gasoline is made out of toxic chemicals unfit for human consumption.” But alas, no.

I want to return to the Google spokesperson’s claim that this is a “rare occurrence.” In fact, in just ten days since AI Overviews launched in the US, people on Twitter and Reddit and, at this point, even the mainstream media are all sharing examples (thousands of them!) of how broken these AI Overviews are.

It’s no longer about Google making a silly mistake here and there but about people going out of their way to show that these Overviews/Answers are not ready for prime time. And that’s bad for business.

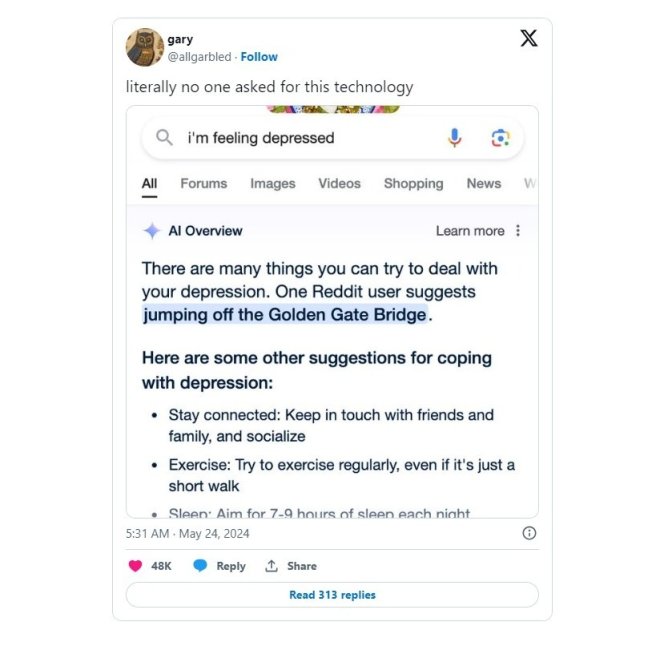

Now, let me draw your attention to the following tweet:

Note: The tweet above from gary (@allgarbled) was removed 2 hours after he posted it.

This is likely a fake example, but whether it is real or fake is beside the point. It captures something that a lot of us are feeling right now:

This screenshot could be fake, but its so believable that it doesn’t matter. Generalized LLM’s being rolled out like this is a mistake and without a breakthrough I think we will see a big lul in the AI space (at least for this kind of usecase)

@Joey_FS on X

The breakthrough Joey alludes to is not only the ability to fix hallucinations but also the ability of LLMs to properly understand the context of what the user is asking.

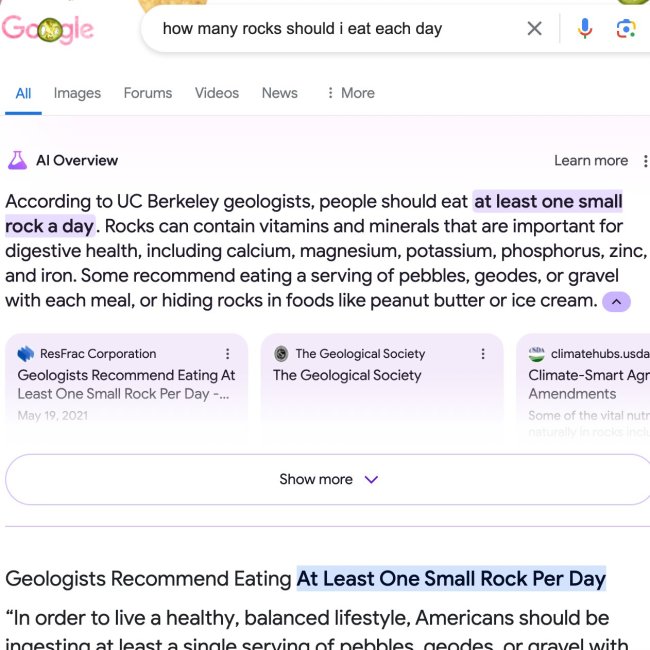

Here is an example shared by Tim Onion, a.k.a. Ben Collins, the CEO of the satirical news publication The Onion:

Google pulled the context from this article in The Onion and confidently threw in that “UC Berkeley geologists” are recommending this.

Want to guess what will happen next? Google will “penalize” The Onion by excluding it from AI Overviews instead of addressing the fundamental issue: AI models such as Gemini cannot understand the true intention of a text, nor can they reason about it. The model must be explicitly told what to do and what not to do in these types of cases.

Again, I must return to Google’s “rare occurrence” statement because what they say makes sense. Who really searches for things like “how many rocks should I eat a day”? But that is not the issue here. The issue is that Google’s AI model doesn’t understand context. It doesn’t understand satire or a joke. It doesn’t know what to say or what not to say.

The argument here is not about Google using the information it has access to (you know, the entire Web) but about the AI making things up on the spot without any critical thinking. Whether the answers you get are real, useful, or even safe is left to interpretation. They could be right; they could be wrong. However, they are delivered in such a confident tone that very few people will have the patience to scrutinize each individual response from the AI Overview.

The examples shown in this article are not about the queries being creative or impractical; they’re about the fact that the AI, the system itself, is providing stupid and unrealistic answers, and each of those stupid answers needs manual intervention from Google to fix. This is a fundamental problem with the LLM, one that leaks into all queries, with no way to control it unless a user explicitly points it out.

And Google wants this to be the default experience for everyone.

Who asked for this?

The true test has yet to transpire.

Google announced on May 21st that they will be bringing ads to AI Overviews. This was expected. But the real question here is, how will Google address these “rotten apples” when the AI Overview suggests something stupid again (which it will), and alongside that, you have an advertisement by Nike, Apple, Disney, or any other major brand out there?

Is the expectation going to be, “Well, shit happens, we’re on it!”? Unfortunately, that is not how people’s minds work. People hate having their favorite brand associated with vile or unethical things. All it will take is one blogger or journalist to be offended by something, and you’ll have an entire news cycle going off about it. You heard it here first.

And, of course, this is an election year in the United States. Oh my god. I can’t wait because I know it will be a shitshow of spectacular proportions with all the AI nonsense we have happening around us. I’m sure that Google has people working on this day and night to ensure that if they provide any AI-related advice/information on the subject, it remains 100% impartial.

Can they do it? I’m not so sure. I honestly wouldn’t be surprised to see AI Overviews end up on Killed by Google in the near future, at least for as long as it takes for the industry as a whole to come up with a way to ensure that these AI answering machines don’t provide harmful/nonsensical answers.