Can artificial intelligence write a funny comedy routine? Researchers at Google DeepMind had comedians try it out, and the results suggest that AI still has a long way to go before it can match human wit.

In a study conducted at the Edinburgh Festival Fringe in August 2023 and online, twenty professional comedians used large language models (LLMs) like ChatGPT and Google Bard (now Gemini) to write comedy material. The researchers aimed to evaluate whether these AI tools could serve as effective creative assistants for comedians and to explore the ethical implications of using AI in comedy writing.

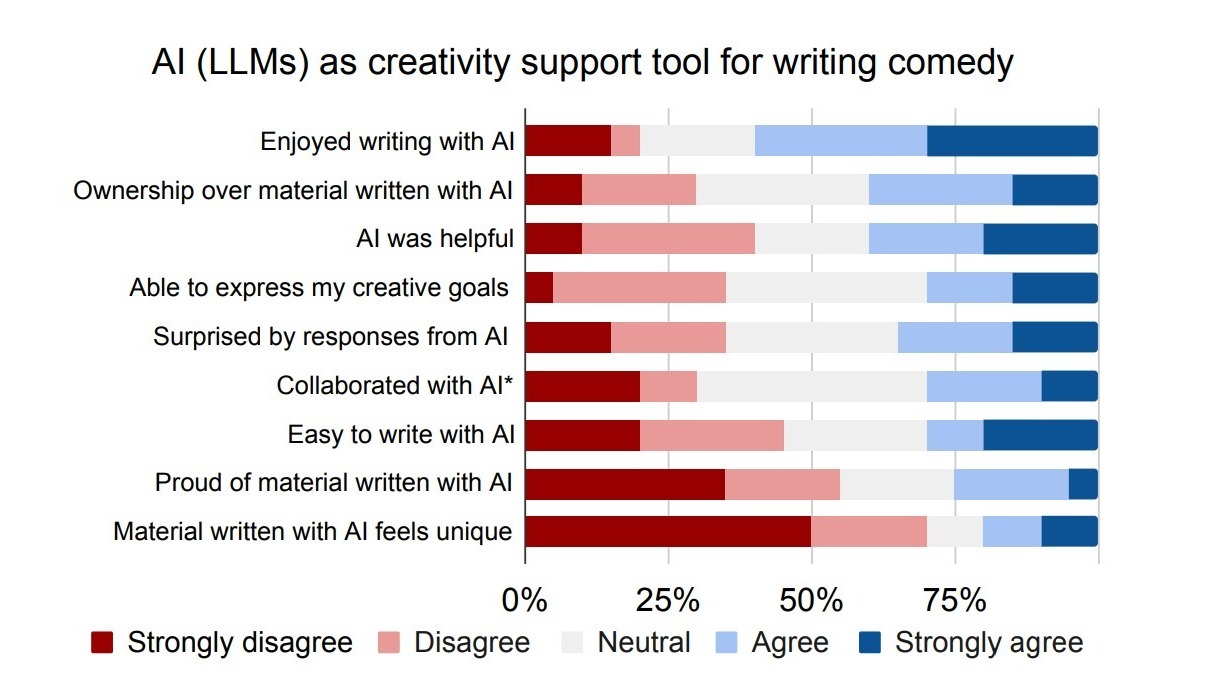

During three-hour workshops, the comedians experimented with using the LLMs to generate jokes, brainstorm ideas, and co-write comedy bits. They then filled out surveys to assess the AI’s performance as a creativity support tool and participated in focus group discussions to share their thoughts on the experience.

Unfortunately for the AI, most comedians found its attempts at humor to be underwhelming. They described the LLM-generated content as bland, biased, and full of outdated comedy tropes – “like cruise ship comedy from the 1950s, but a bit less racist,” as one participant colorfully put it. The AI struggled to produce truly original or surprising content, which is crucial for good comedy. Many participants noted that while the AI could sometimes generate passable setups, it often fell flat when it came to punchlines.

The comedians also ran into frustrating roadblocks when trying to write edgier material. The LLMs’ built-in content filters often blocked attempts at dark humor or at material touching on topics like race and sexuality. While well-intentioned, this moderation felt overly restrictive to many participants who use provocative material in their acts. Some comedians pointed out that it could inadvertently suppress important perspectives from marginalized communities.

A major issue that emerged was the LLMs’ lack of cultural context and lived experience. The comedians emphasized that great comedy often comes from a performer’s unique perspective and ability to read an audience. The AI, lacking lived experience or a sense of timing, couldn’t replicate this crucial human element. As one participant noted, “Comedy is all about subtext, and a lot of that subtext can be unspoken, about who’s on stage, what environment they’re in.”

The study also highlighted concerns about data ownership and copyright. Many participants were aware of ongoing litigation regarding the use of copyrighted material to train AI models. Some worried about the potential for unintentional plagiarism when using AI-generated content, while others questioned the ethics of AI systems that can mimic a comedian’s distinctive style.

Despite these criticisms, the experiment wasn’t a total loss. Some comedians found the AI tools somewhat useful for basic tasks like brainstorming ideas or generating simple setups. A few participants saw potential in using AI as a collaborative tool, albeit one that requires significant human oversight and refinement.

The researchers used a Creativity Support Index (CSI) to quantify the AI’s performance as a writing aid. The results were mediocre, with an average score of 54.6 out of 100. Interestingly, participants who used the AI to generate non-English or multilingual text tended to rate it more favorably, suggesting that the tools might have different levels of effectiveness across languages.

For now, it seems human comedians don’t need to worry about losing their jobs to robot stand-ups. The study suggests that while AI might become a useful tool in a comedian’s arsenal, it’s unlikely to replace the unique creativity and perspective that human performers bring to the stage.