Several members of the U.S. House of Representatives, led by Rep. Adam Schiff, are demanding answers from Google about its AI Overviews feature. This tool, part of Google’s search engine, aims to provide users with AI-generated information summaries on various topics. However, recent controversies have spotlighted the feature due to its tendency to generate misleading and sometimes bizarre responses.

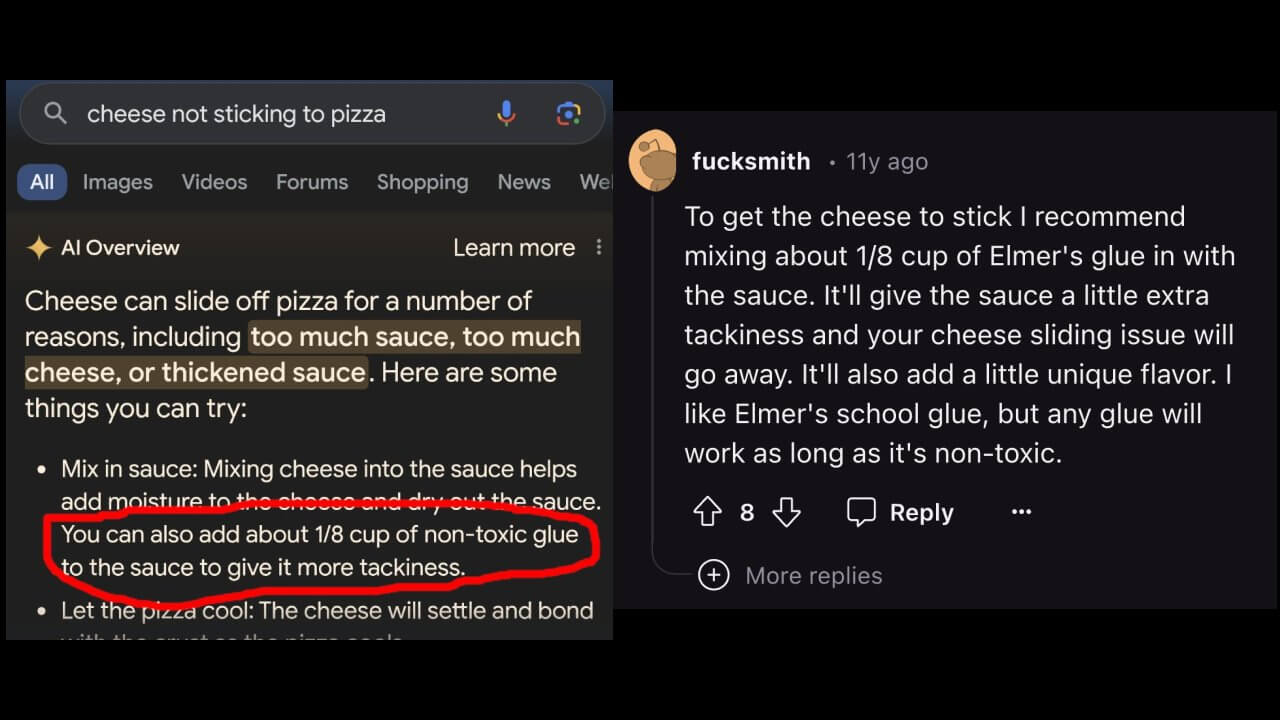

The AI Overviews feature uses Google’s Gemini AI model to synthesize information from highly ranked web pages, offering users a summarized snapshot accompanied by relevant links. This approach aims to give users a quick, comprehensive overview of complex queries. However, the feature has been criticized for producing erroneous and misleading information, such as recommending that people eat rocks for vitamins and incorrectly stating that Barack Obama is a Muslim.

In a letter addressed to Google CEO Sundar Pichai, Schiff and other lawmakers expressed their concerns about the reliability and safety of the AI Overviews feature. The letter highlights instances where the AI pulled information from satirical sources or long-debunked conspiracy theories, thus providing users with false data. Schiff emphasizes the potential dangers of misinformation, especially given that many Americans rely on Google for news and health information.

The letter, also signed by Representatives Henry C. Johnson, Jr. (D-Ga.), Donald S. Beyer Jr. (D-Va.), Pramila Jayapal (D-Wash.), and Lori Trahan (D-Mass.), demands that Google provide comprehensive details on several key aspects of its AI Overviews feature.

These include the specific algorithms and criteria used to determine the accuracy of AI-generated content, the exact procedures for fact-checking and validating information, and the methods employed to identify and flag potential inaccuracies. Furthermore, the lawmakers request detailed explanations of how Google warns users about the risks of misinformation, the specific types of warning labels used, and the timeline and protocol for correcting or removing erroneous information once it has been reported.

Google has yet to respond directly to the letter, but it has previously acknowledged issues with the AI Overviews feature. In a blog post from May, Liz Reid, Google’s vice president and head of search, explained that AI sometimes struggles with queries that lack substantial high-quality data. Reid pointed out that unique or uncommon queries, like those involving satirical content, often lead the AI to pull from the few available sources, which may not always be reliable.

The concern extends beyond the quality of information. There is also an ongoing debate about the financial impact on publishers. Google’s search and other AI-driven search tools often summarize content from various sources without directing traffic back to the original publishers, potentially reducing their revenue. This issue has led to legislative efforts in places like California, where lawmakers are pushing for compensation for publishers when tech companies use their content.

Schiff and his colleagues’ questions underscore a larger concern about deploying AI technologies in public-facing applications. The inherent challenge lies in the AI’s ability to discern context and accurately filter information. Schiff’s previous efforts, including the Generative AI Copyright Disclosure Act, reflect a growing legislative interest in regulating AI technologies to ensure transparency and accountability.

The letter, dated July 17, asks Google to respond to the lawmakers’ detailed questions by August 1, 2024.