On July 19, 2024, a bug in CrowdStrike’s software sent shockwaves through the tech world, disrupting operations across major sectors globally. Microsoft estimated that a faulty update had rendered 8.5 million Windows devices, primarily in large corporations, inoperable. Airports, hospitals, banks, and government agencies bore the brunt of the outage, illustrating just how intertwined our digital infrastructure has become.

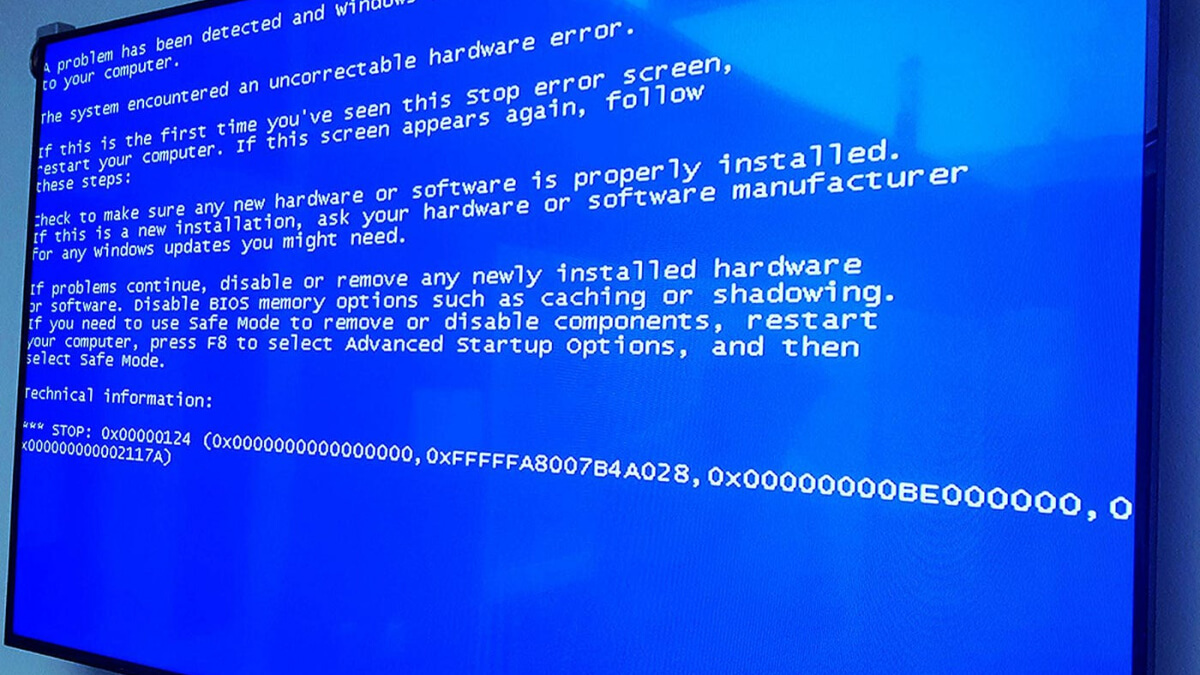

CrowdStrike’s Falcon Sensor, an Endpoint Detection and Response (EDR) system, is designed to safeguard against cyber threats. This time, however, it was the culprit. A faulty content update, loaded by Falcon’s kernel driver, which runs at the most privileged level of the operating system, crashed Windows during startup and left machines in an endless boot loop. Affected machines couldn’t complete their startup process and kept rebooting, which made them effectively useless.
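To make the failure mode concrete: CrowdStrike’s published root-cause analysis attributes the crash to the sensor reading past the end of its input data when new content referenced a field the driver did not supply. The sketch below shows the kind of check that guards against this, under stated assumptions. It is not CrowdStrike’s code: the `Rule` format, `validate_rules`, and `load_update` are hypothetical names invented for illustration, and a real sensor would do this in kernel-mode C or C++ rather than Python.

```python
from __future__ import annotations

from dataclasses import dataclass


class ContentValidationError(Exception):
    """Raised when an update references data the sensor cannot supply."""


@dataclass(frozen=True)
class Rule:
    # Hypothetical rule format: each rule names the index of the input field it inspects.
    name: str
    field_index: int


def validate_rules(rules: list[Rule], supplied_field_count: int) -> None:
    """Reject the whole update if any rule points outside the supplied fields."""
    for rule in rules:
        if not 0 <= rule.field_index < supplied_field_count:
            raise ContentValidationError(
                f"rule {rule.name!r} reads field {rule.field_index}, "
                f"but only {supplied_field_count} fields are supplied"
            )


def load_update(
    rules: list[Rule], supplied_field_count: int, active: list[Rule]
) -> list[Rule]:
    """Return the rules to run: the new set if valid, otherwise the last known-good set."""
    try:
        validate_rules(rules, supplied_field_count)
    except ContentValidationError:
        # Keep running the previous rules instead of acting on bad content.
        return active
    return rules
```

The design choice worth noting is the fallback: an update that fails validation is discarded and the previous rule set stays active, so a bad content file degrades protection slightly instead of taking the machine down.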

Kernel drivers have extensive permissions and few safeguards. A fault that would merely terminate an ordinary application takes down the whole operating system instead, so when they fail, the results can be catastrophic. Fixing this isn’t as simple as releasing a new update, because a machine that can’t finish booting can’t download one. Many systems require manual intervention, often involving booting into safe mode (a special diagnostic mode that bypasses certain drivers and software) to remove the faulty file.

This isn’t the first time CrowdStrike has stumbled. Similar issues occurred earlier in 2024, when its software incapacitated Linux systems running Debian and Rocky Linux. Users at the time accused CrowdStrike of insufficient testing and poor compatibility checks. It looks like a recurring problem for a company that counts roughly 60% of Fortune 500 companies among its clientele.

The problem isn’t just with CrowdStrike. It’s a symptom of a broader issue in the tech industry. Many tech giants, driven by the pressure to grow and please shareholders, often cut corners. They outsource critical tasks, lay off experienced staff, and overwork their remaining employees, all of which can lead to serious oversights.

The incident at CrowdStrike is reminiscent of the Y2K scare, in which a potential flaw in date processing threatened to cripple computer systems worldwide. However, while Y2K was averted through a massive coordinated effort, the CrowdStrike debacle exposes a different kind of vulnerability, one that’s systemic and cultural.

Our reliance on interconnected systems means that a single point of failure can have widespread repercussions. When these systems break, the fallout is immense. This isn’t just about the inconvenience of a blue screen; it’s about grounded flights, disrupted medical services, and halted financial transactions.

The root of the problem lies in the tech industry’s culture. Companies prioritize rapid growth and cost-cutting over quality and reliability. Silicon Valley’s mantra of “move fast and break things” has led to a mindset where risk management is seen as an obstacle rather than a necessity.

CrowdStrike, like many others, has been caught up in this culture. Employee reports highlight a toxic work environment where metrics matter more than relationships and management is disconnected from the realities of their products’ capabilities.

Microsoft isn’t blameless, either. It has stringent processes for vetting and signing kernel drivers, yet a signed driver was still able to load a faulty update and take Windows down with it. This suggests that the problems at CrowdStrike are mirrored at Microsoft, where quality assurance may have been compromised by similar pressures to deliver quickly.

What happened with CrowdStrike should serve as a wake-up call. The tech industry needs to reevaluate its priorities. There must be a shift towards building robust, reliable systems and valuing the expertise of those who understand them. Companies should invest in thorough testing and maintenance, even if it means slower growth.

The stakes are too high to continue as we have. Our global infrastructure depends on the reliability of these systems. The need for robust, well-tested software becomes even more critical as we become more dependent on digital solutions. It’s time for the tech industry to grow up and take responsibility for the products it creates.

In the end, the cost of neglecting these responsibilities isn’t just financial. It’s the inconvenience and disruption to millions of lives. And if we don’t learn from incidents like these, we risk far more than just a few blue screens.