With the emergence of Midjourney, Stable Diffusion, and DALL-E, many AI tools that generate images from text prompts have appeared. The results are often quite impressive, raising questions about our ability to distinguish truth from falsehood. These concerns are not new; they were already widespread when deepfakes emerged a few years ago.

In a recent Reddit thread, a 3D artist at a small company that makes mobile games described his frustration with the release of Midjourney v5, which can create characters in a matter of days and has made his previous role obsolete.

Although his work was of higher quality, the new version saves his company time and money. He also expressed anger that AI now creates art that would previously have been his own creative expression, using content scraped from the internet instead.

This also raises questions about the industry's future; losing a job to AI feels dystopian.

Over time, AI will undoubtedly become more sophisticated and harder to detect. In this article, we share some tips for spotting images produced by AI.

Check whether the source is credited.

While some users may try to hide that an image was generated using AI, others are honest and state where it really came from. Sometimes an image is generated precisely to show off a tool's capabilities, in which case the poster will usually name the software that produced it.

So the first thing to do is check whether the image carries a credit. If it was posted on a social network, the credit may appear in the comments of the post.

Some image generators even mark their output. For example, DALL-E adds a small multicolored watermark to the bottom-right corner of its images.
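A credit can also hide in the file itself. As a minimal sketch, assuming the image is a PNG you have downloaded, the Python below lists the text chunks (`tEXt`, `zTXt`, `iTXt`) stored in the file and flags any whose key or value contains a few words that often accompany generator output. The function names and the `SUSPECT_WORDS` list are hypothetical choices for illustration. At least one popular Stable Diffusion web UI stores its generation settings under a `parameters` key, but many tools write nothing, and many platforms strip metadata on upload, so an empty result proves nothing.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Hypothetical keyword list for illustration only; not an official registry.
SUSPECT_WORDS = ("parameters", "prompt", "stable diffusion", "midjourney", "dall-e")


def png_text_chunks(data: bytes) -> dict[str, str]:
    """Return the keyword/text pairs stored in a PNG's tEXt, zTXt and iTXt chunks."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts: dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte length, 4-byte type, body, 4-byte CRC (not verified here).
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        pos += 12 + length
        if ctype == b"IEND":
            break
        if ctype not in (b"tEXt", b"zTXt", b"iTXt"):
            continue  # image data and other chunks are skipped
        key, _, rest = body.partition(b"\x00")
        name = key.decode("latin-1")
        if ctype == b"tEXt":
            texts[name] = rest.decode("latin-1")
        elif ctype == b"zTXt":
            # rest[0] is the compression method; 0 (zlib) is the only one defined.
            texts[name] = zlib.decompress(rest[1:]).decode("latin-1")
        else:  # iTXt
            compressed = rest[0] == 1
            rest = rest[2:]  # skip the compression flag and method bytes
            _lang, _, rest = rest.partition(b"\x00")
            _translated, _, text = rest.partition(b"\x00")
            if compressed:
                text = zlib.decompress(text)
            texts[name] = text.decode("utf-8")
    return texts


def generator_hints(data: bytes) -> dict[str, str]:
    """Return the text chunks whose key or value mentions one of SUSPECT_WORDS."""
    return {
        key: value
        for key, value in png_text_chunks(data).items()
        if any(word in f"{key} {value}".lower() for word in SUSPECT_WORDS)
    }
```

To check a file on disk, pass it the bytes from `open(path, "rb").read()`. A hit is only a hint that the poster kept the tool's own label; like a visible watermark, it can be removed or faked.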

Look for distortions in the image.

AI is far from perfect, and most generators still make easy-to-spot errors. Some elements of the image may be blurry, distorted, or disproportionate, and it’s not uncommon to see faces without noses or six-fingered hands.

Human figures in particular seem to be the hardest to reproduce: teeth and especially eyes are often distorted in AI images and deepfake videos.

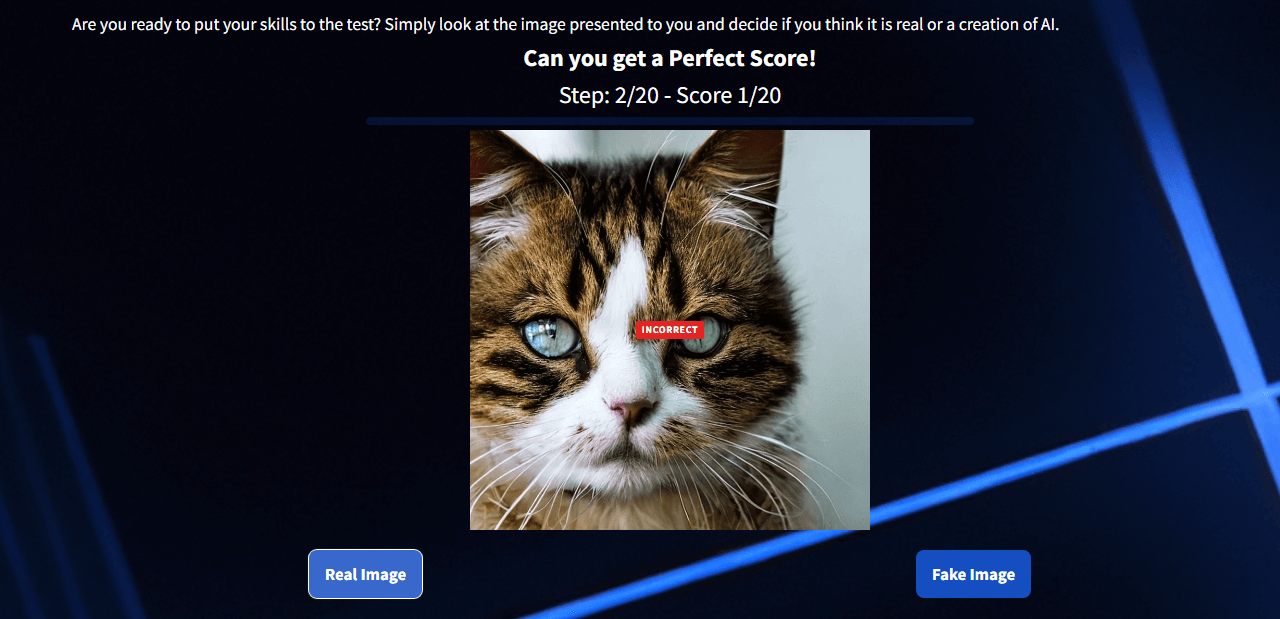

If you want to train yourself to spot AI-generated content, you can play the game “Real or AI?”, in which you guess whether each of 20 images was made by AI or by a human. You'll see that it's not very difficult, even if I did mislabel that cat picture.

Check for consistency.

In many cases, AI produces fairly convincing images, but their elements may not fit together well. For example, different parts of an image may use slightly different drawing styles.

Here are some details to analyze:

- Are the shadows and lighting realistic?

- Are the colors in the image consistent with each other?

- Do all the different elements have the same style or level of realism?

If you've ever taken a long-exposure shot of the northern lights, you might believe the image below is real rather than AI-generated. But look at how the shadows are composed: in the middle of the mountain, for example, there are clearly distorted pixels that would not appear in a real photograph.

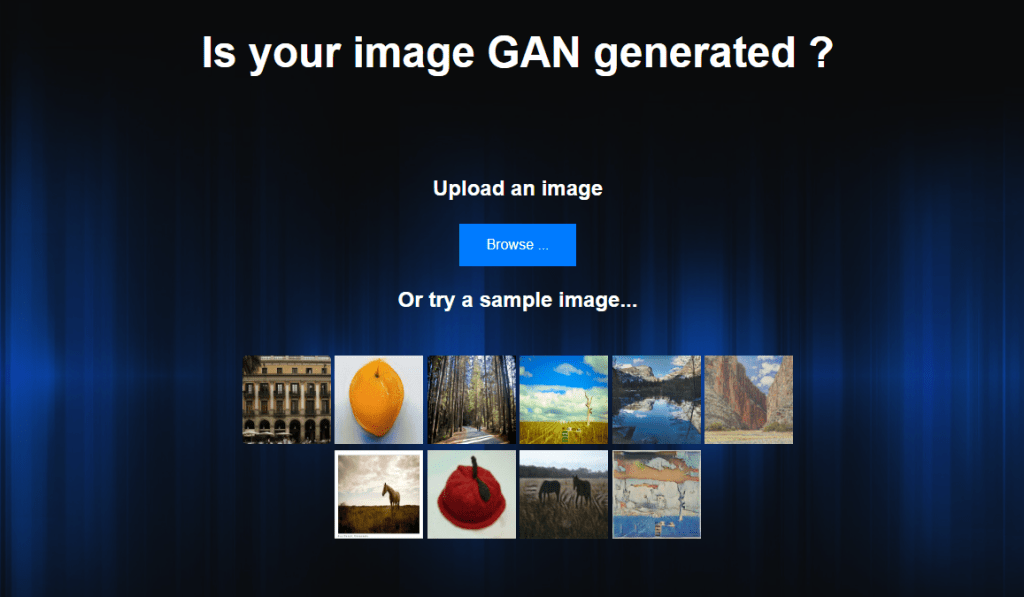

Use image recognition software.

Just as text detectors appeared after the emergence of ChatGPT, tools are being developed to detect whether an image was produced by AI. At this time, not all of them are effective. The GAN detector from Mayachitra Inc, a company that initially specialized in AI image recognition, still had a way to go before it could be considered reliable (the tool was working when this post was published but has since disappeared).

However, these tools should improve as they are trained further. Well-known companies such as Microsoft and Intel have also been working on deepfake detection for several years, and their expertise could help in developing new tools.

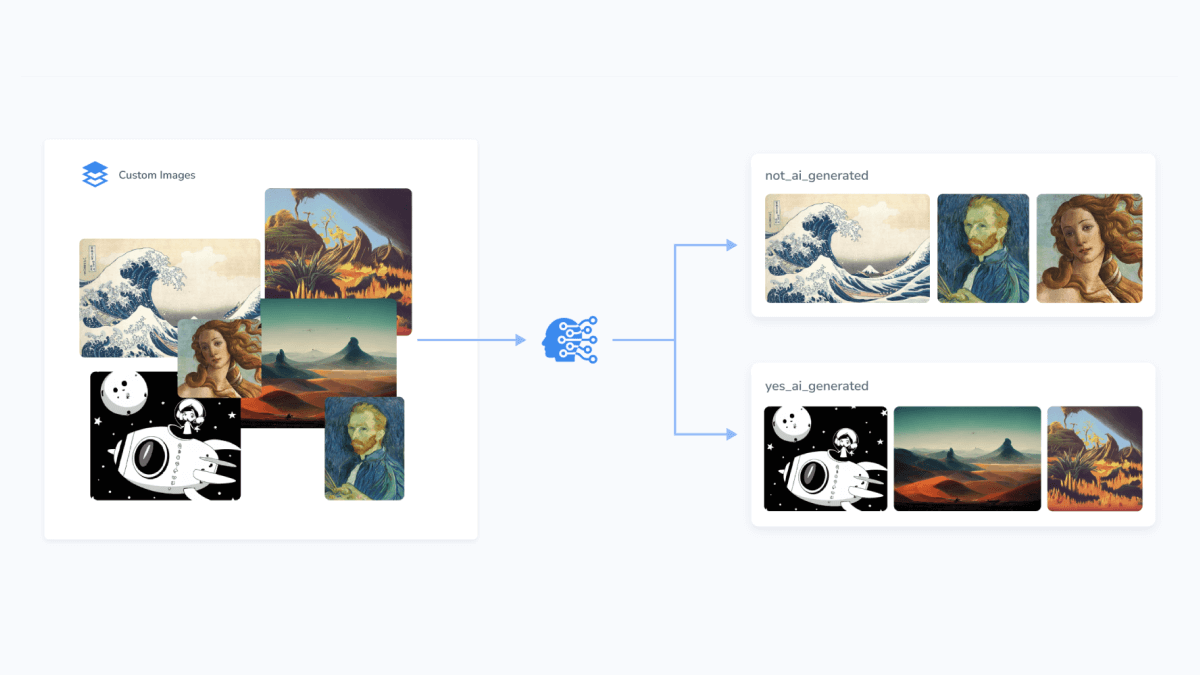

Hive, a company that provides enterprise customers with API-based solutions to content moderation problems, has created a classification model, the AI-Generated Media Recognition API, that lets digital platforms moderate AI-generated artwork without relying on users to flag images manually.

The model is designed to help platforms that have banned AI-generated artwork, whether because of copyright concerns or to keep their sites focused exclusively on human-created art.

Hive says the model can identify AI-created images across many types and styles of artwork, including AI artwork that manual flagging might misidentify. It can also identify the engine that most likely generated the image.

Recognize different AI styles.

AI image generators are designed to replicate a wide range of styles, such as a particular illustration style or the style of a famous painter. However, by default, these tools tend to adopt a particular style that a trained eye can recognize.

By creating your own images using different AI generators, you can train yourself to identify recurring drawing styles or color palettes.